Analysis Package

Overview

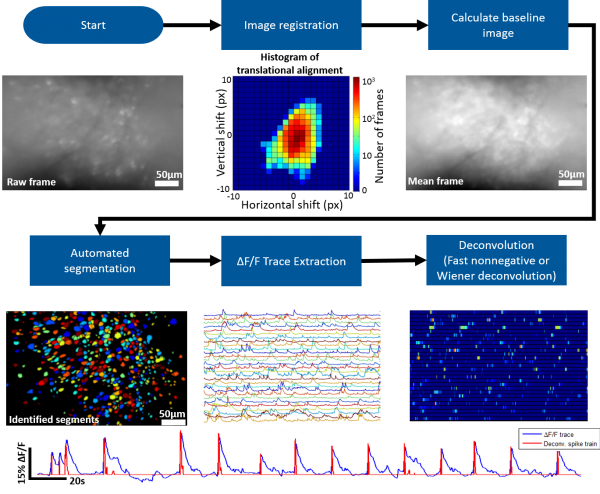

The MATLAB analysis package provided on this wiki contains the tools needed to go from raw microscope video to the deconvolved activity of individual neurons. The package supports batch processing of multiple recording sessions (generally run in parallel) and can also be run on a single session stored in the MATLAB workspace.

Roughly speaking, the steps involved are the following:

- Generate MATLAB data structure

  - All access to the raw or processed data is handled through this data structure

  - Memory efficient (less than 10 MB per minute of recording)

- Correct for motion artifacts using an amplitude or FFT image registration algorithm

- Identify the boundaries of individual neurons (we provide a novel, fully automated segmentation approach)

- Extract dF/F activity for identified neurons

- Deconvolve dF/F activity using Wiener or Fast Nonnegative deconvolution to approximate neuronal spiking pattern

- Additional Analysis:

  - Cross-talk removal

  - Segment matching across sessions

  - Behavior tracking and syncing to microscope data

  - Activity detection

The Miniscope Analysis Github repository can be found here.

Work Flow

A general workflow for processing and analyzing miniscope data can be found in msBatchRun.m (batch processing) and msRun.m (single-recording processing). These scripts walk you through all the functions needed to go from the raw data to the approximate spiking activity of individual neurons. We suggest starting with msRun.m and walking through every step for a single recording session. You can use msPlayVidObj(vidObj,downSamp,columnCorrect, align, dFF, overlay) to display a video of your data while applying selected steps of the processing workflow. For example, msPlayVidObj(ms,5,true,true,true,false) displays an aligned dF/F video downsampled by a factor of 5.

Image Registration

We suggest using Dario Ringach's recursive image registration algorithm.
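For readers new to FFT-based registration, the sketch below shows the basic idea on synthetic data: estimate a rigid, translation-only shift between a frame and a reference from the peak of their cross-correlation, computed in the Fourier domain. This is not Dario Ringach's recursive algorithm and not the package's own code. It is a minimal illustration that assumes pure circular translation, and all variable names are hypothetical.

```matlab
% Illustrative sketch only: rigid (translation-only) registration of one frame
% to a reference using FFT cross-correlation. Synthetic data; base MATLAB only.

% Synthetic reference frame: a single Gaussian blob standing in for a cell.
[X, Y] = meshgrid(1:64, 1:64);
ref = exp(-((X - 30).^2 + (Y - 25).^2) / (2 * 3^2));

% "Moving" frame: the reference circularly shifted by a known amount.
trueShift = [4, -6];                      % [rows, columns], hypothetical motion
moving = circshift(ref, trueShift);

% Circular cross-correlation computed in the Fourier domain.
xc = real(ifft2(fft2(moving) .* conj(fft2(ref))));

% The peak location gives the shift (MATLAB indices start at 1).
[~, peakIdx] = max(xc(:));
[peakRow, peakCol] = ind2sub(size(xc), peakIdx);
estShift = [peakRow, peakCol] - 1;

% Shifts larger than half the frame size wrap around to negative values.
frameSize = size(ref);
wrapped = estShift > frameSize / 2;
estShift(wrapped) = estShift(wrapped) - frameSize(wrapped);

% Undo the estimated motion.
aligned = circshift(moving, -estShift);
```

Real recordings do not shift circularly, so a practical implementation would need to handle frame edges (for example by cropping) and may need sub-pixel precision; neither is attempted here.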

Automated Segmentation

Our MATLAB analysis package implements a novel, fully automated segmentation algorithm. Roughly speaking, the algorithm proceeds as follows:

- Detect ΔF/F local maxima throughout recording and record their spatiotemporal location.

- For each spatial location where local maxima occurred, generate a pixel group, G_px, containing pixels at the center of this location.

- Calculate the cross correlation of G_px’s mean ΔF/F trace with the individual ΔF/F traces of surrounding pixels, limiting the ΔF/F traces to only the frames temporally close to nearby local maxima events.

- Pixels with a high correlation coefficient are added to G_px.

- Repeat until an iteration occurs where no more surrounding pixels are added to G_px.
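The steps above can be sketched on synthetic data as follows. This is a simplified illustration, not the package's implementation: it grows a single pixel group from one seed pixel, uses a plain amplitude threshold in place of proper local-maximum detection, and only considers 4-connected neighbours. The thresholds (0.5 for events, 0.6 for correlation), the ±10-frame window, and all variable names are assumptions chosen for the example.

```matlab
% Illustrative sketch only: grow one pixel group (G_px) from a seed by
% correlating neighbouring pixels with the group's mean dF/F trace.
rng(0);                                   % reproducible synthetic noise
nFrames = 400; H = 20; W = 20;

% Synthetic dF/F movie: one "cell" (5x5 patch) with four decaying transients.
sig = zeros(nFrames, 1);
for e = [50 150 250 350]
    sig(e:e+19) = exp(-(0:19)' / 5);
end
cellMask = false(H, W);
cellMask(8:12, 9:13) = true;
dff = 0.05 * randn(H, W, nFrames);
dff = dff + reshape(sig, 1, 1, []) .* double(cellMask);  % implicit expansion (R2016b+)

flat = reshape(dff, H * W, nFrames);      % one row per pixel

% Step 1 (simplified): seed at the pixel with the largest dF/F peak and treat
% frames where its trace is high as local-maximum events.
[~, seedIdx] = max(max(flat, [], 2));
eventFrames = find(flat(seedIdx, :) > 0.5);   % hypothetical event threshold
nearEvent = false(1, nFrames);
for f = eventFrames
    nearEvent(max(1, f - 10):min(nFrames, f + 10)) = true;
end

% Steps 2-5: start G_px at the seed, then repeatedly add correlated neighbours.
G = false(H, W);
G(seedIdx) = true;
corrThresh = 0.6;                         % hypothetical correlation threshold
while true
    groupTrace = mean(flat(G(:), nearEvent), 1);

    % 4-connected neighbours of the current group (no toolbox needed).
    nb = false(H, W);
    nb(2:end, :)   = nb(2:end, :)   | G(1:end-1, :);
    nb(1:end-1, :) = nb(1:end-1, :) | G(2:end, :);
    nb(:, 2:end)   = nb(:, 2:end)   | G(:, 1:end-1);
    nb(:, 1:end-1) = nb(:, 1:end-1) | G(:, 2:end);
    nb = nb & ~G;

    added = false;
    for k = find(nb)'
        R = corrcoef(groupTrace, flat(k, nearEvent));
        if R(1, 2) > corrThresh
            G(k) = true;
            added = true;
        end
    end
    if ~added
        break;                            % no pixels added: the group is final
    end
end
```

Restricting the correlation to frames near events keeps long stretches of pure noise from diluting the correlation coefficient, which is why the traces are indexed with nearEvent rather than used in full.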

dF/F Trace Extraction and Deconvolution

After segments (the ROIs of each active neuron) have been identified, dF/F traces can be extracted and then deconvolved to approximate the spiking activity of each segment. We prefer the non-negative deconvolution approach of Vogelstein et al.
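As a rough illustration of these two steps, the sketch below extracts a dF/F trace from one ROI of a synthetic movie and then applies a basic frequency-domain Wiener deconvolution. It is not the package's code and not the fast non-negative method of Vogelstein et al. The median baseline, the exponential calcium kernel, its decay constant tau, and the noise-to-signal ratio nsr are all assumptions made for the example.

```matlab
% Illustrative sketch only: dF/F extraction for one ROI followed by a simple
% Wiener deconvolution. Synthetic data; base MATLAB only.
rng(1);                                   % reproducible synthetic noise
nFrames = 300; H = 16; W = 16;

% Ground truth: three spikes convolved with an exponential calcium kernel.
tau = 10;                                 % assumed decay constant, in frames
spikes = zeros(nFrames, 1);
spikes([40 120 200]) = 1;
kernel = exp(-(0:nFrames-1)' / tau);
calcium = conv(spikes, kernel);
calcium = calcium(1:nFrames);

% Synthetic raw movie: constant background plus the cell's signal inside the ROI.
roi = false(H, W);
roi(6:10, 6:10) = true;
movie = 100 + 2 * randn(H, W, nFrames);
movie = movie + reshape(50 * calcium, 1, 1, []) .* double(roi);  % R2016b+

% dF/F extraction: average the ROI pixels, then normalise by a baseline F0.
flat = reshape(movie, H * W, nFrames);
rawTrace = mean(flat(roi(:), :), 1)';     % nFrames x 1
F0 = median(rawTrace);                    % assumed baseline estimate
dff = (rawTrace - F0) / F0;

% Wiener deconvolution with a constant noise-to-signal power ratio.
Hk = fft(kernel);
nsr = 0.1;                                % assumed; larger values smooth more
est = real(ifft(conj(Hk) .* fft(dff) ./ (abs(Hk).^2 + nsr)));

% Frames with the three largest estimates, in temporal order.
[~, order] = sort(est, 'descend');
detected = sort(order(1:3))';
```

The Wiener estimate can go negative and has arbitrary scale, which is one reason a non-negative approach is attractive when the goal is to approximate spiking.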